Three Ways to Perform Bulk Inserts

Mar 3, 2021 by Robert Gravelle

I recently wrote a node.js script to iterate over millions of files per day and insert their contents into a MySQL database. Rather than process one record at a time, the script stored file contents in memory and then ran an INSERT statement every 1000 files. To do that, I used the bulk insert form of the INSERT statement. Depending on your particular requirements, you may opt to go with a different solution. In today’s blog, we’ll go over a few alternatives.

The INSERT statement supports several syntax variations, one of which is for inserting multiple rows at the same time. To do that, we simply need to enclose each value list in parentheses and separate them using a comma:

INSERT INTO table_name (column_list)

VALUES

(value_list_1),

(value_list_2),

...

(value_list_n);

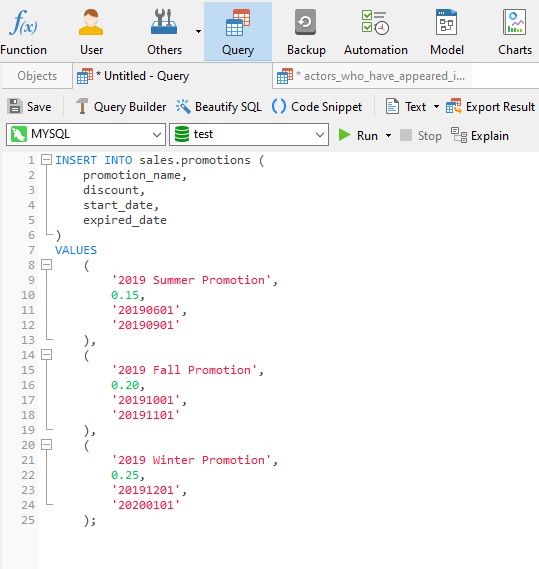

Simple enough. Here’s a sample statement shown in Navicat for MySQL:

While the above statement is formatted for readability, you don’t have to concern yourself with that when generating the SQL dynamically. As long as the syntax is semantically correct, it will work just fine. Finally, note that 1000 is the maximum number of rows that can be inserted at one time using an INSERT statement.

Another option, for those of you who aren’t thrilled about writing scripting code, is to use something like LOAD DATA INFILE. That’s a MySQL-specific command, but most other database systems (DBMS) support something similar. It can import a variety of delimited file formats, including commas (CSV), Tabs (TDV), and others.

Here’s the statement for importing data from the “c:tmpdiscounts.csv” file into the discounts table:

LOAD DATA INFILE 'c:/tmp/discounts.csv' INTO TABLE discounts FIELDS TERMINATED BY ',' ENCLOSED BY '"' LINES TERMINATED BY 'n' IGNORE 1 ROWS;

In the above statement, the IGNORE 1 ROWS option is employed to ignore headers.

I would have liked to have used this method for importing data, but the files that we were importing from utilized a highly specialized and complex format that required a lot of front-end logic.

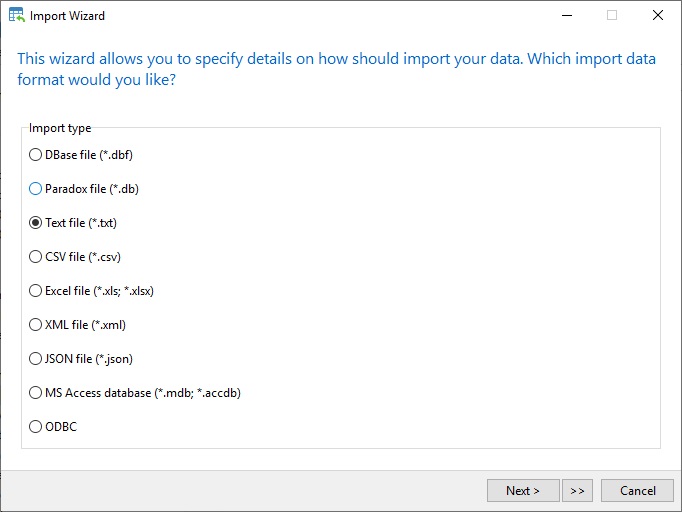

Still another approach would be to use an import utility such as Navicat’s Import Wizard. It supports just about any format that you can imagine, including CSV, Excel, HTML, XML, JSON, and many other formats:

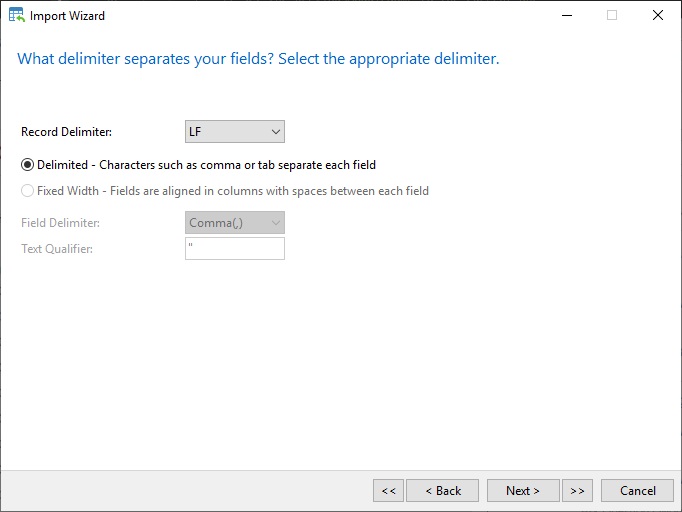

There is a screen for choosing the record delimiter, field delimiter, and text qualifier:

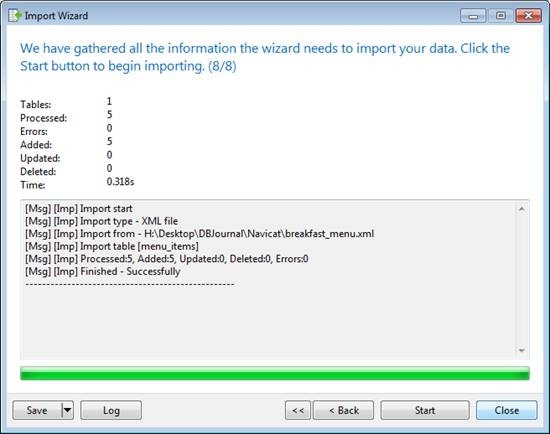

Navicat shows you the progress in real time:

Once your done, you can save all your settings for later use, which is not only useful for running the same on a regular basis, but it also allows you to automate it, so that imports happen without any additional intervention required on your part!

In today’s blog, we covered a few alternatives for performing bulk inserts into MySQL and other DBMS.

Interested in Navicat for MySQL? You can try it for 14 days completely free of charge for evaluation purposes!